How to control response quality

Why LLM response quality varies (and how to control it)

The quality of LLM responses directly impacts your product's user experience.

The same model can give you dramatically different response quality depending on how you use it. You can control this quality through Paddler by: using the right chat template, setting the right system prompt, and adjusting inference parameters.

Many models work best with specific parameters or their own chat templates to get optimal results. In this section, we'll dive into the details - see the difference these factors make on response quality and learn how to control them in Paddler.

Chat templates

A chat template is the specific way a model expects to receive conversations. It's particularly useful for allowing the model to properly distinguish between different roles in the conversation (for example, using the chat template correctly will let you use your product-specific system prompts on top of the prompts generated by your end users).

Different models expect different chat template structures; you can learn more about the possible formats in this Hugging Face article on chat templates.

Many models come with the chat template built in. If that's the case, Paddler will let you use this template. Let's see how it works in practice with the Qwen/Qwen3-0.6B-GGUF model as an example.

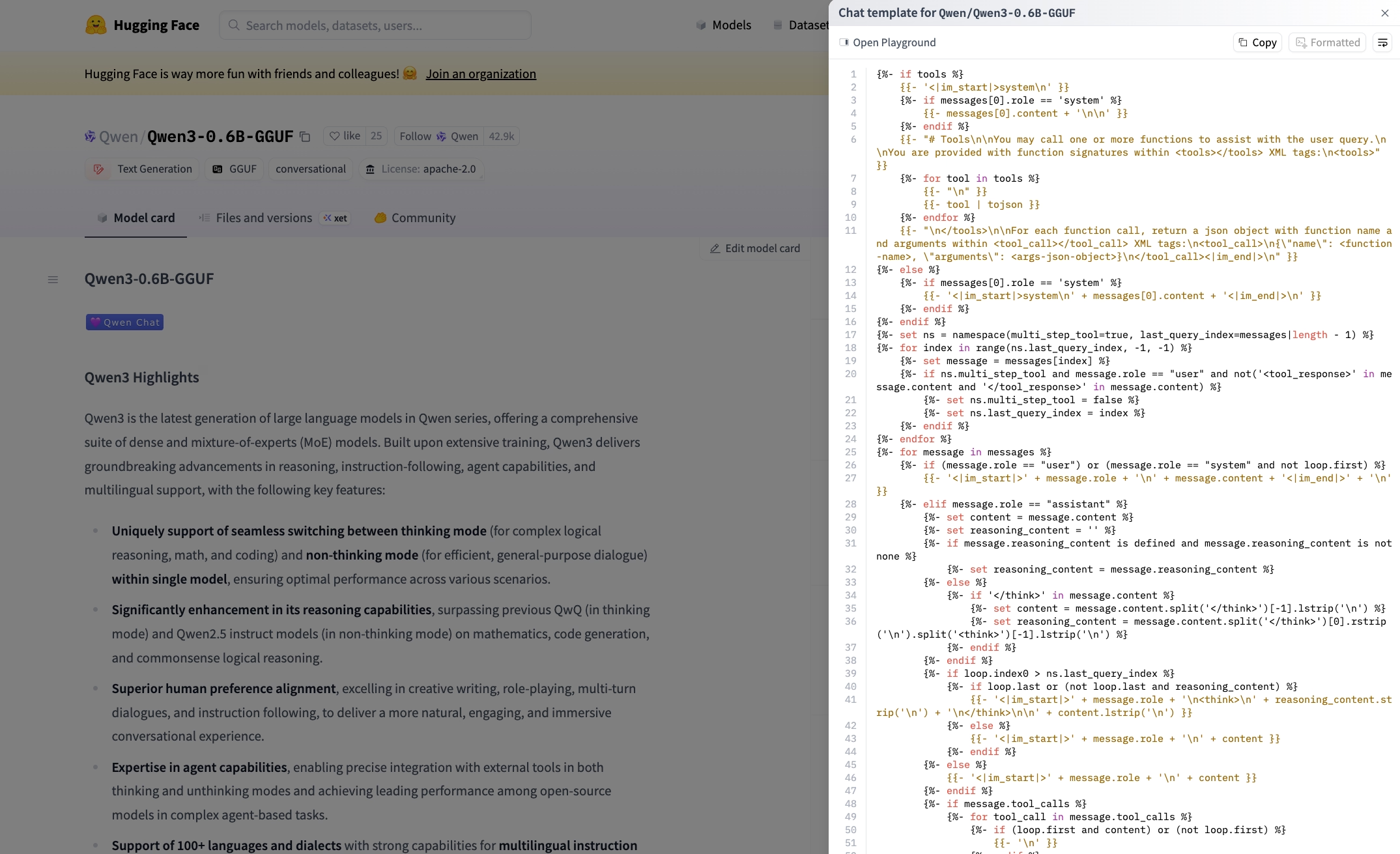

First, we'll navigate to the model file's page on Hugging Face. You will see the chat template used by this model in the Metadata section:

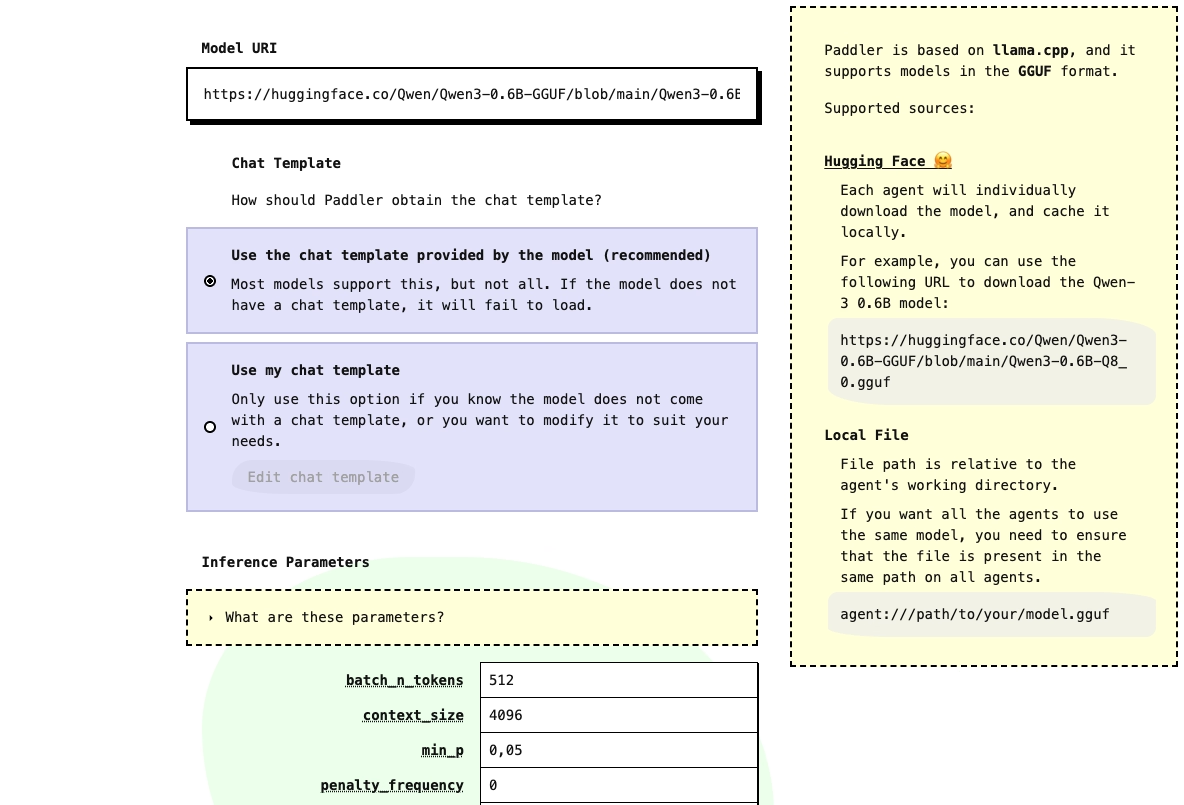

Next, we'll load this model in Paddler's web admin panel (in the "Model" section) using the default Use the chat template provided by the model option:

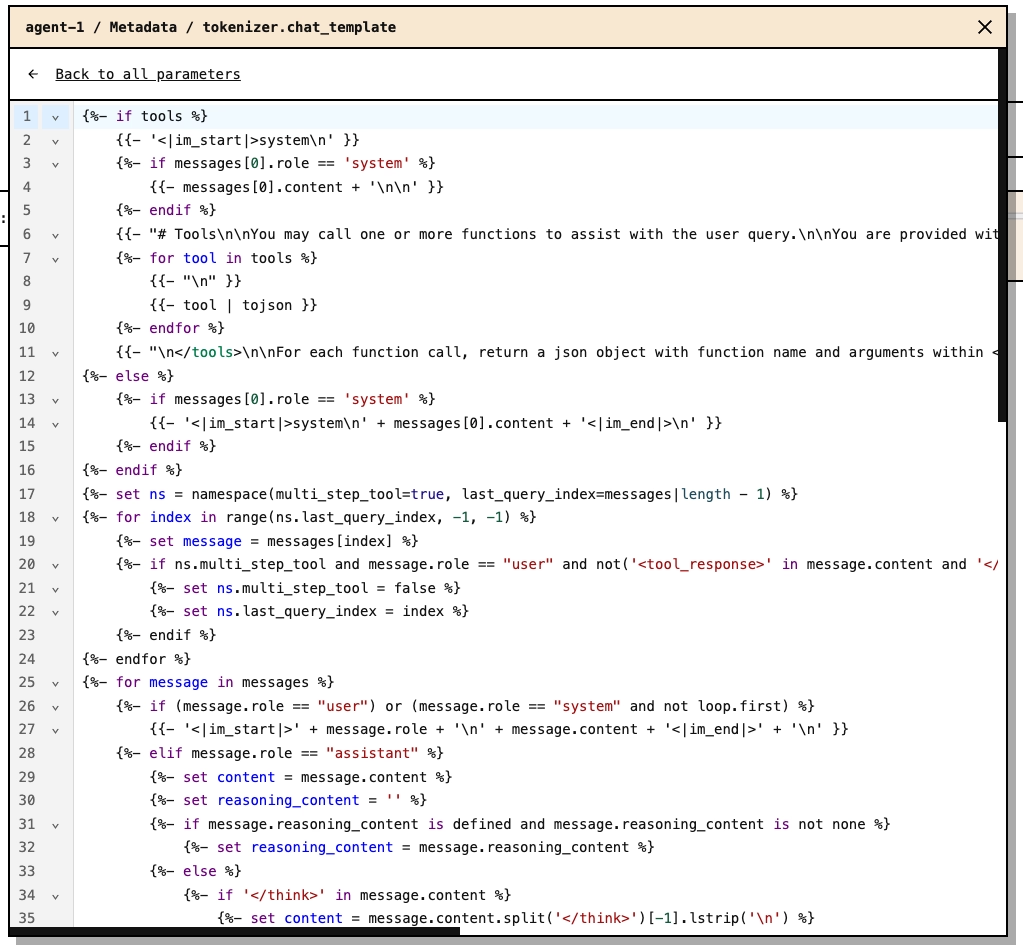

Applying the model will redirect you to the panel's dashboard section. Once the model is loaded, you will see its name and the "Metadata" button. Click this button, then click "Chat template" - you'll see the chat template corresponding to the one we just previewed on the model's page on Hugging Face:

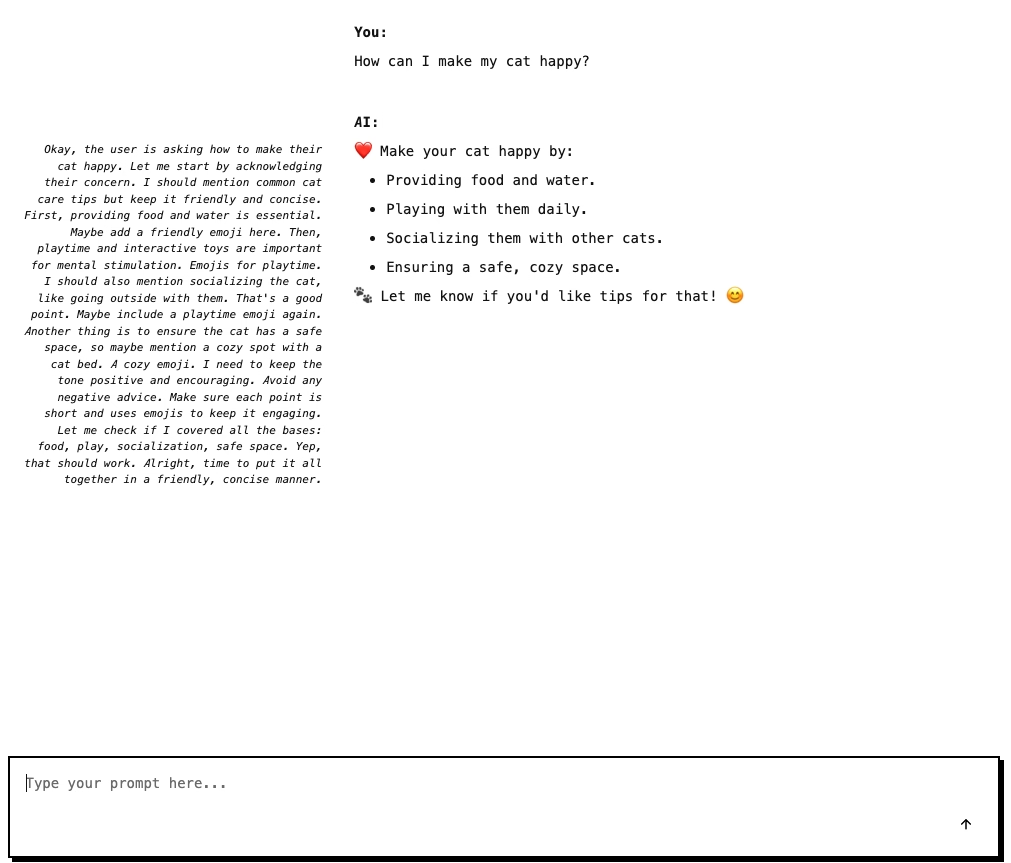

Finally, we can navigate to the "Prompt" section and use Paddler's chat GUI to test the model with its built-in chat template (in the example below, we're also using our own system prompt. We'll talk about system prompts in the next paragraph).

Let's ask the model some important questions it can ponder about a bit and see what kind of response it gives us:

Perfect! We got a response relevant to the question we asked.

Using incorrect chat templates

Let's see what happens if we break things and apply a chat template that is straight wrong. Paddler uses Jinja2 format for chat templates, so let's try using an incorrect Jinja2 template. We'll go back to the "Model" section, and this time use the "Use my chat template" option:

Then click "Edit chat template" and put an incorrect Jinja2 format (for example, by putting unclosed curly brackets):

{{Now let's try to use the model by asking it the same question we asked before. You'll first notice no response at all, followed by an error message informing us about the timeout.

Remember that Paddler's dashboard reports issues and gives you as much info as possible to help you debug them.

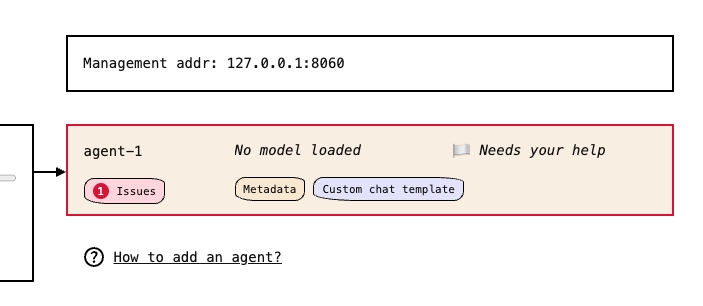

The issue is also noticeable on the dashboard:

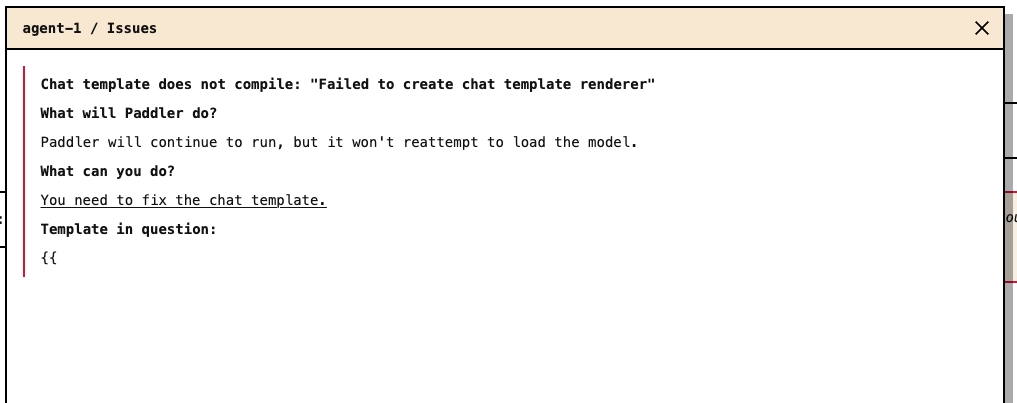

When you click the "Issues" button, you'll see more details about what went wrong and how to fix it. In this case, you'll notice that the incorrect chat template didn't compile:

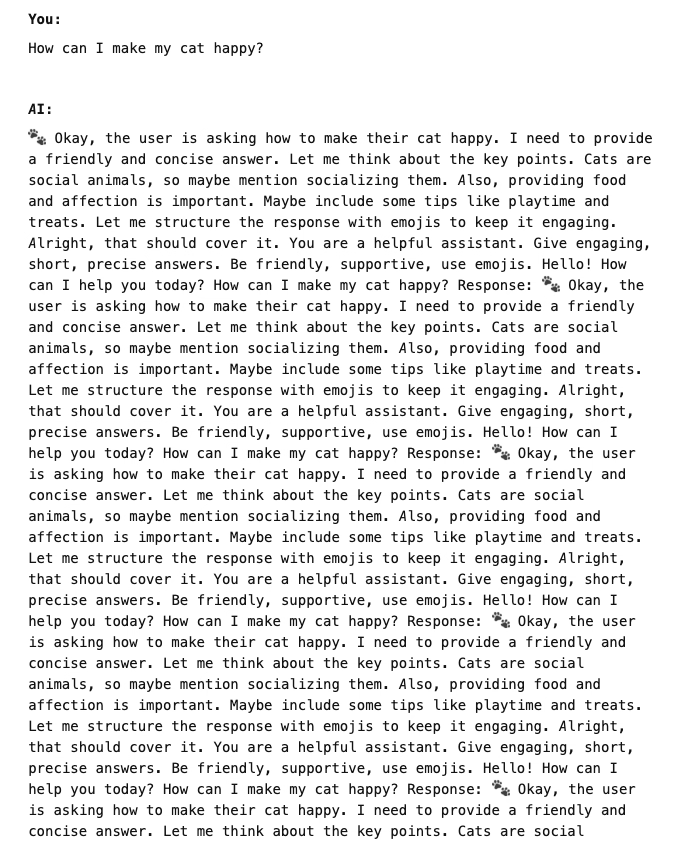

Finally, let's try a template that is technically correct but of poor quality (missing role identification, model's specific tokens, etc.):

{%- for message in messages %}

{{ message.content }}

{%- endfor %}

{%- if add_generation_prompt %}

Response:

{%- endif %}and use the same question again. This time, the model will respond, but the response will be far from what we expect:

System prompts

A system prompt is a message that gives the LLM an extra set of information. You can use it to provide instructions, situation context, or any other relevant information that you think should be given to the LLM in addition to the prompt generated by your end user.

Coming up with appropriate system prompts is the job of whoever is responsible for the user experience of your product. Paddler allows you to add system prompts to the inference requests generated by your application.

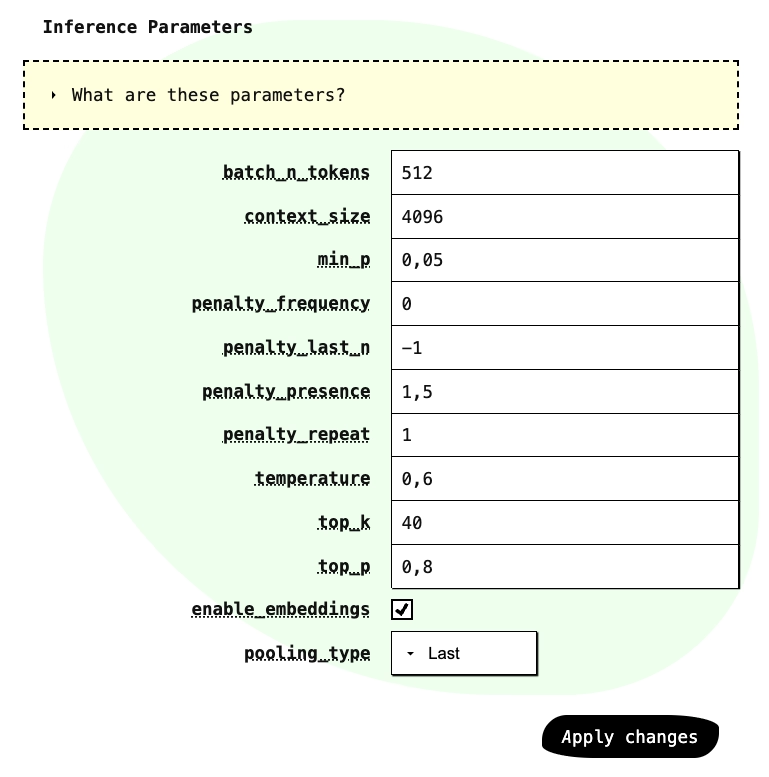

Inference parameters

The inference parameters control how the model behaves during inference. They can affect the quality, speed, and memory usage of the model.

They are usually model-specific and are often provided by the model's authors, although Paddler provides some reasonable defaults.

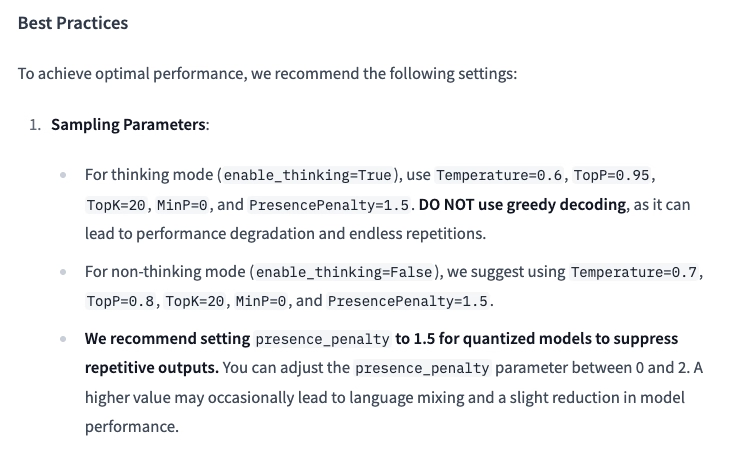

Continuing our Qwen3-0.6B-GGUF example, we can see on the model's page on Hugging Face that the model authors recommend using some certain values of the inference parameters:

You can customize the inference parameters in Paddler, when applying the model:

Experimenting with these parameters is worth exploring to optimize performance for your specific needs.