Generating tokens and embeddings

Paddler exposes both REST and WebSocket API you can use to generate tokens and embeddings. You will find detailed documentation for each endpoint in the API docs.

Let's test a few endpoints.

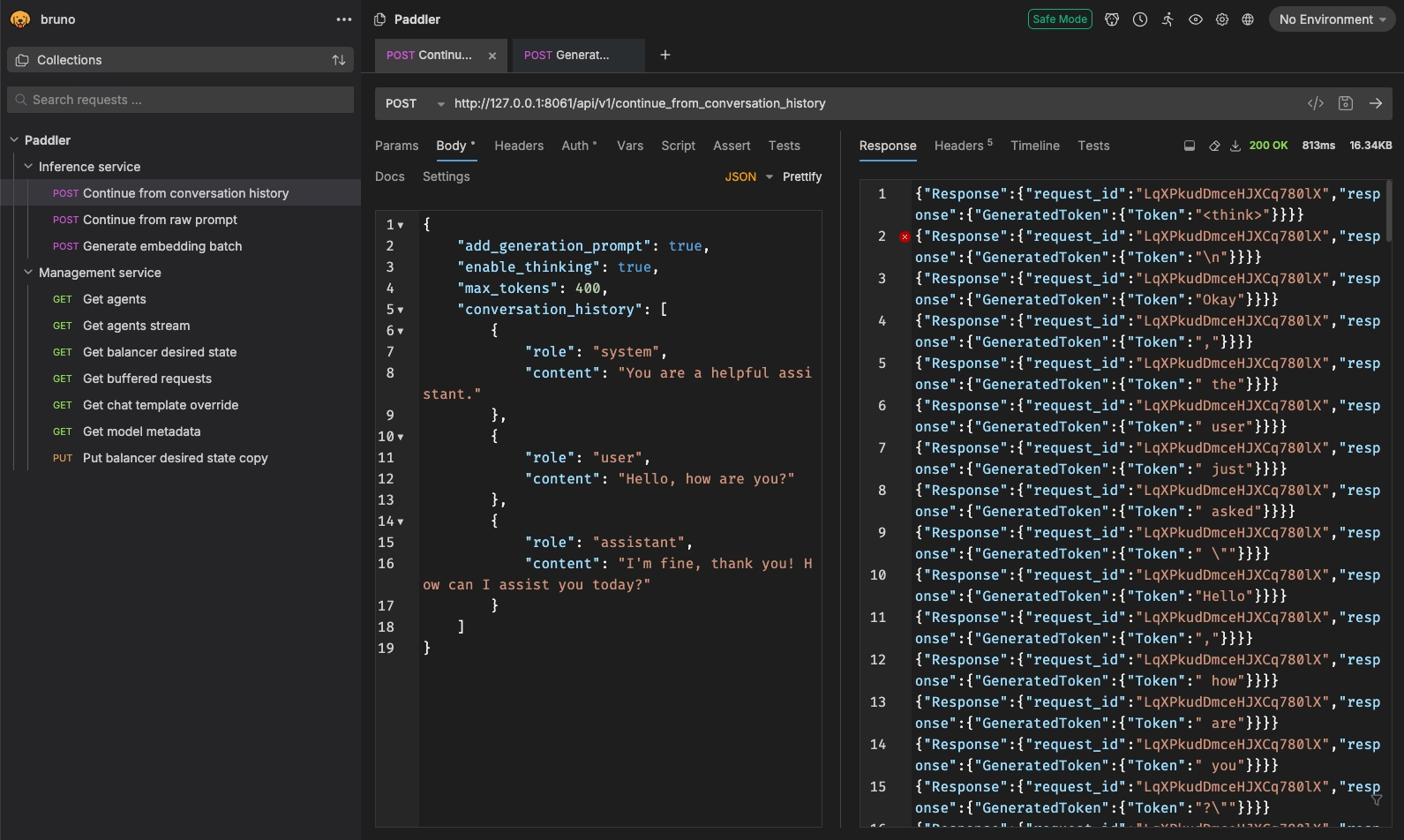

Generating tokens using the "Continue from conversation history" endpoint.

This is the primary endpoint to receive tokens. It takes your entire conversation history, uses the chat template to format a prompt, and sends you back the generated tokens. It's documented here.

An example payload may look like this:

Let's take this payload and test it with Bruno, a convenient tool to test HTTP requests:

The response presented in Bruno (right side in the screenshot above) shows how tokens in the response are returned as a stream.

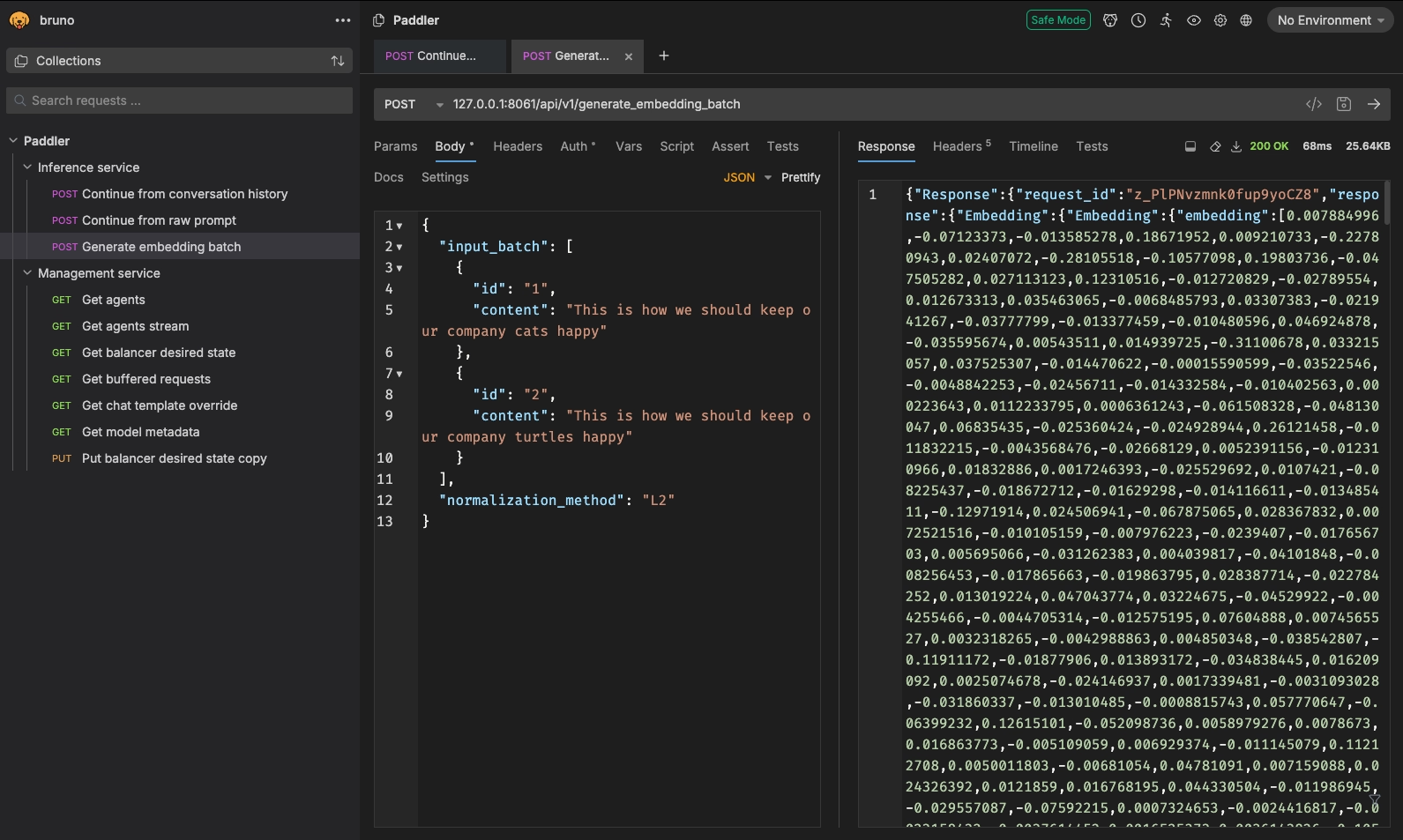

Generating embeddings using "Generate embedding batch"

Paddler also comes with an endpoint to generate embeddings, documented here.

To test it, we need first to ensure we have embeddings enabled (see: How to enable embeddings for more information).

Our example payload can be something like this:

Same as before, we will test it with Bruno. This time, we get a stream of embeddings:

Customizing the maximum time for generating a single token or embedding

You can change the maximum time of generating a single token or embedding before the generation process times out.

By default, this time is set to 5000 milliseconds. This value can be customized when running the balancer with the --inference-item-timeout flag (the value should be provided in milliseconds).